Event Camera: the Next Generation of Visual Perception System

Event cameras can extend computer vision to scenarios where it currently falls short. Over the coming decades, event cameras may mature enough to be mass-produced, to have dedicated algorithms, and to appear in widely used products.

Text Generation Models - Introduction and a Demo using the GPT-J model

This article describes how text generation models work. We cover basic models such as Markov chains as well as more advanced deep learning models. We also give a demo of domain-specific text generation using the GPT-J model.

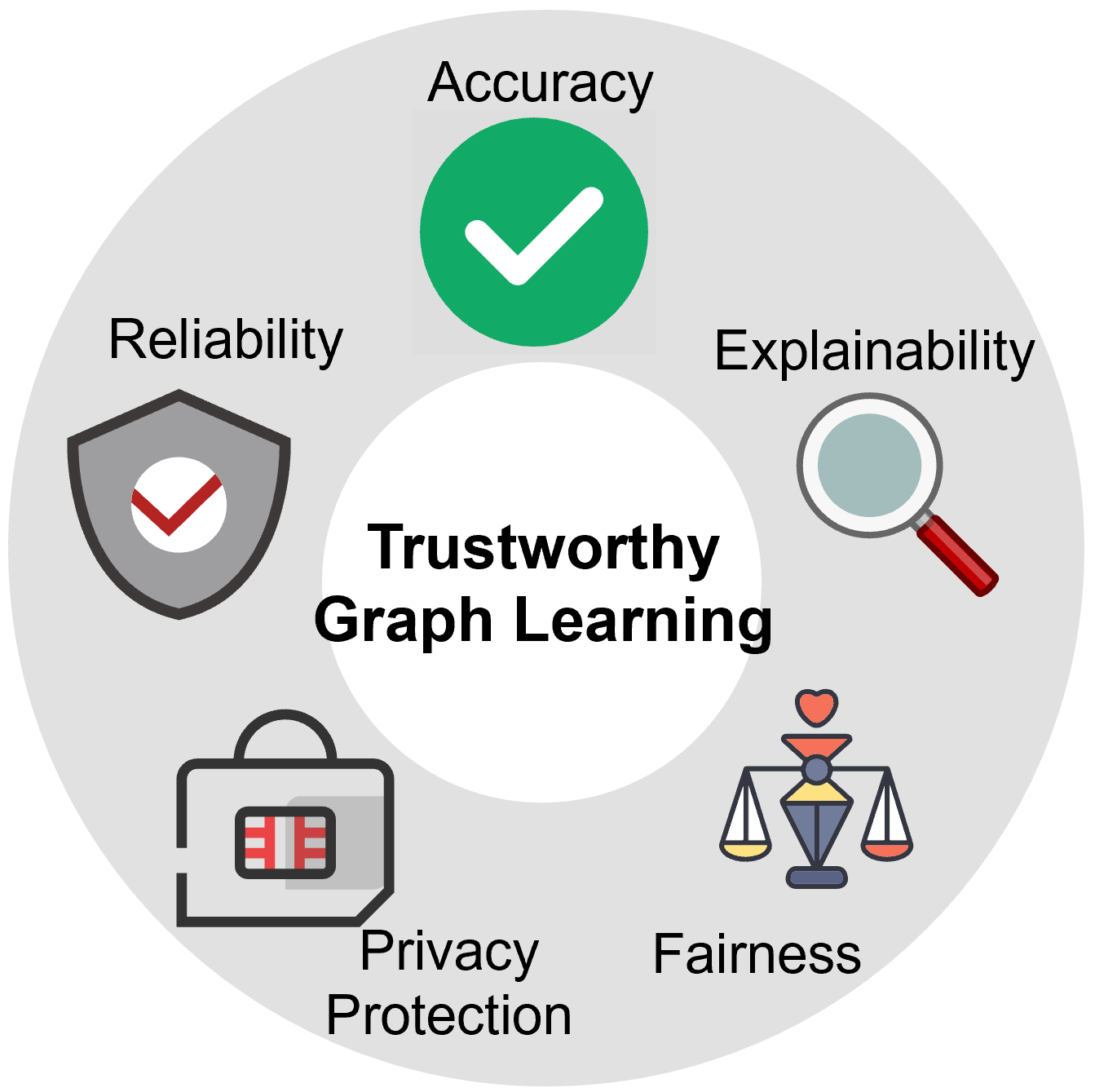

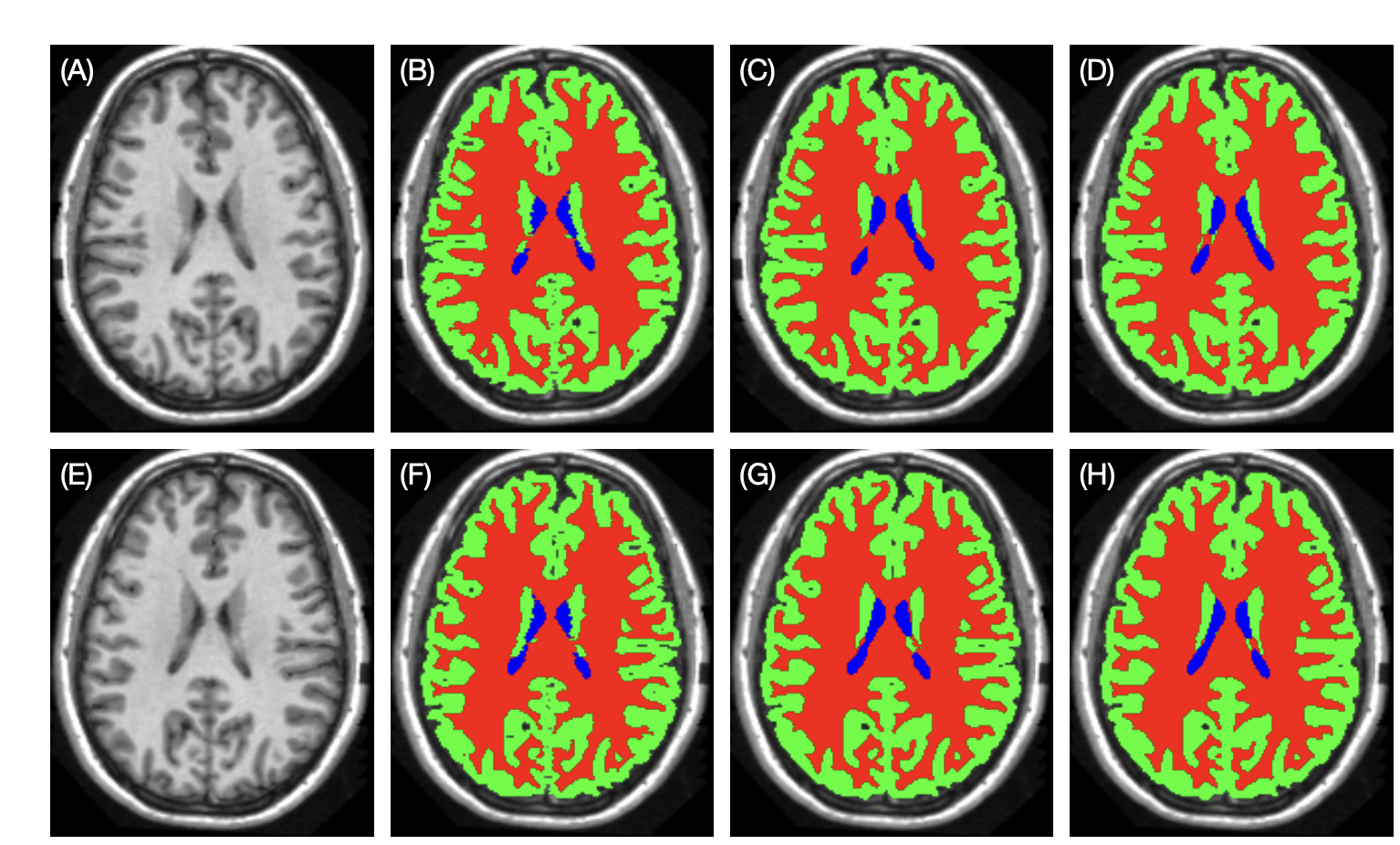

Group Equivariant Convolutional Networks in Medical Image Analysis

This is a brief review of the applications of G-CNNs in medical image analysis. It covers the fundamentals of group equivariant convolutional networks and their use in medical image classification and segmentation.

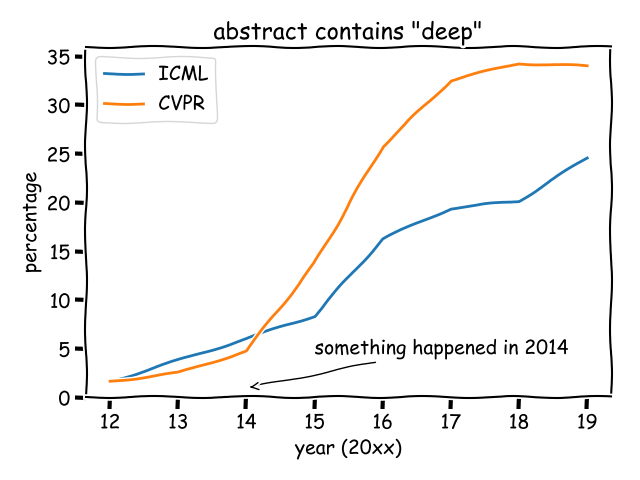

Co-Tuning: An easy but effective trick to improve transfer learning

Transfer learning is a popular method in the deep learning community, but it is usually implemented naively (e.g., by copying weights as initialization). Co-Tuning is a recently proposed technique for improving transfer learning that is easy to implement and effective across a wide variety of tasks.

High Dimension Data Analysis - A tutorial and review for Dimensionality Reduction Techniques

This article explains and compares several dimensionality reduction techniques. It works through the mathematics behind a number of feature selection and feature transformation techniques, and gives algorithmic descriptions and implementations of some others. It closes with a brief review of other work in this area.

A New Paradigm for Exploiting Pre-trained Model Hubs

It is often overlooked that the number of models in pre-trained model hubs is growing very fast. As a result, pre-trained model hubs are popular but under-exploited. This article advocates a new paradigm for exploiting pre-trained model hubs more fully.

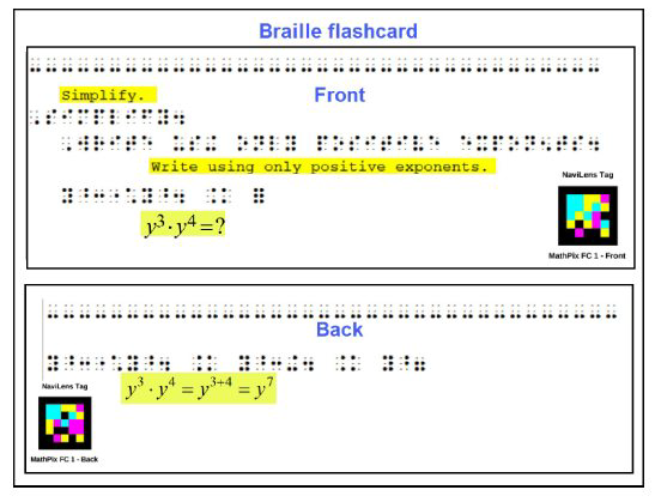

Using Mathpix and NaviLens to create accessible math flashcards

Students with print disabilities, whether due to blindness, low vision, learning disabilities, or physical disabilities, can benefit greatly from accessible math flashcards and tutorials. Mathpix substantially reduces the work required to create these by capturing text and math from a variety of sources and making them ready for conversion to print or braille.

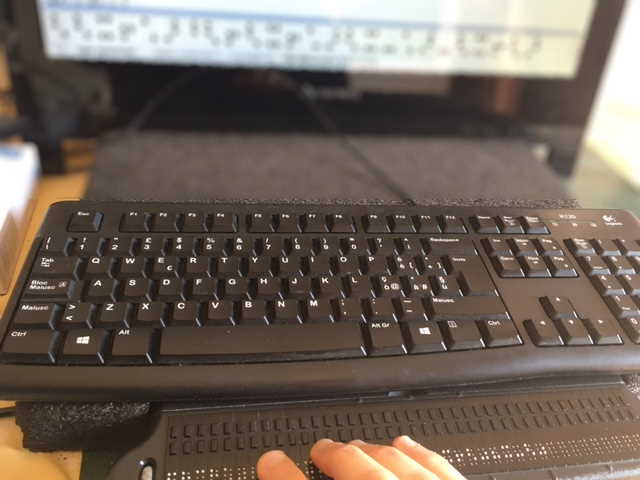

The use of Mathpix OCR with EDICO Scientific Editor to help blind students in STEM education

In this tutorial, we'll show how Mathpix OCR helps convert math and science assignments instantly into both braille and speech. We'll use the free EDICO Scientific Editor to demonstrate how a math assignment can be imported using Mathpix technology and then solved using a refreshable braille display.
